In his 1999 book The Age of Spiritual Machines, Ray Kurzweil proposed the Law of Accelerating Returns. He stated that:

And we couldn’t agree more after witnessing clear signs of this being true in artificial intelligence (AI).

Looking back over the past twelve years, it’s striking that AI has arguably seen more innovation in this period than in its entire prior history, with unprecedented advances and lasting paradigm shifts.

Thus, we intend to take a retrospective look at some of the most pivotal and defining breakthroughs that have shaped AI from 2012 to early 2024 and helped us get to where we are today.

This is part 1 of the two-part AI review series:

Part 1: 2012 to 2017 (this post)

Part 2: 2018 to 2023 (will be published on Thursday).

Let’s begin!

2012 and 2013

In 2012, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) marked a significant milestone in computer vision. The challenge aimed to evaluate AI algorithms for object detection and image classification.

While ImageNet held two challenges before 2012 (in 2010 and 2011), it was the 2012 challenge that triggered the wave of interest in deep learning models.

One of the key breakthroughs from 2012 was AlexNet, a deep convolutional neural network (CNN) developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton.

More specifically, AlexNet revolutionized image classification by sharply reducing the error rate (lower is better) compared to previous approaches: it won ILSVRC 2012 with a top-5 test error of 15.3%, compared with 26.2% for the second-best entry.

Its success was attributed largely to its deeper architecture of eight learned layers (five convolutional layers followed by three fully connected layers), as shown in the image below from the original paper, along with its use of ReLU activations, dropout regularization, and training on GPUs.

AlexNet’s remarkable victory demonstrated the potential of deep learning and sparked interest in building deeper and more complex neural networks.
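To make the "eight layers" concrete, here is a minimal sketch that traces feature-map sizes through the AlexNet layer stack using the standard output-size formula floor((W - K + 2P) / S) + 1. It assumes a 227×227×3 input (the paper states 224×224, but 227 is the size that makes the first layer's arithmetic work out), ignores the paper's split of the model across two GPUs, and omits local response normalization because it does not change shapes. The layer table is our own transcription, not code from the paper.

```python
# Each entry: (name, kind, out_channels, kernel, stride, padding).
# Assumed single-GPU view of AlexNet; normalization layers omitted (shape-preserving).
LAYERS = [
    ("conv1", "conv", 96, 11, 4, 0),
    ("pool1", "pool", None, 3, 2, 0),   # overlapping max pooling: 3x3 window, stride 2
    ("conv2", "conv", 256, 5, 1, 2),
    ("pool2", "pool", None, 3, 2, 0),
    ("conv3", "conv", 384, 3, 1, 1),
    ("conv4", "conv", 384, 3, 1, 1),
    ("conv5", "conv", 256, 3, 1, 1),
    ("pool5", "pool", None, 3, 2, 0),
]
FC_LAYERS = [("fc6", 4096), ("fc7", 4096), ("fc8", 1000)]  # fc8: 1000 ImageNet classes


def out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling window."""
    return (size - kernel + 2 * padding) // stride + 1


def trace_shapes(height=227, channels=3):
    """Return per-layer output shapes and the flattened size fed into fc6."""
    shapes = []
    for name, kind, out_ch, k, s, p in LAYERS:
        height = out_size(height, k, s, p)
        if kind == "conv":  # pooling keeps the channel count unchanged
            channels = out_ch
        shapes.append((name, height, height, channels))
    flat = height * height * channels  # input width of fc6
    for name, units in FC_LAYERS:
        shapes.append((name, units))
    return shapes, flat


shapes, flat = trace_shapes()
for entry in shapes:
    print(entry)
print("flattened features before fc6:", flat)  # 6 * 6 * 256 = 9216
```

Counting the entries whose names start with `conv` or `fc` gives the eight learned layers; the pooling layers have no weights.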

What’s fascinating is that the success of AlexNet also paved the way for the widespread adoption of deep learning in other domains.

Researchers and practitioners began to explore deep neural networks in various fields, including natural language processing (NLP).

And that’s when we saw a major breakthrough in 2013.

Tomas Mikolov and a team of researchers at Google introduced Word2Vec, a technique for learning word embeddings from large text corpora.

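The model represented words as dense vectors in a continuous vector space and could be trained with either of two architectures: Continuous Bag of Words (CBOW), which predicts a word from its surrounding context words, or Skip-gram, which predicts the surrounding context words from a given word.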
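The difference between the two architectures is easiest to see in the training examples each one builds from a sentence. The sketch below generates those examples with a fixed context window; it is a simplification under our own assumptions, since the actual Word2Vec tool also samples the effective window size and trains with tricks such as negative sampling or hierarchical softmax, none of which are shown here.

```python
def skipgram_pairs(tokens, window=2):
    """(center, context) pairs: Skip-gram predicts each context word from the center word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """(context words, target) examples: CBOW predicts the center word from its context."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the quick brown fox".split()
print(skipgram_pairs(tokens, window=1))
print(cbow_examples(tokens, window=1))
```

Skip-gram turns one position into several (center, context) pairs, while CBOW turns it into a single example whose input is the whole context.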

The embeddings produced proved to be a game-changer in NLP as they captured semantic relationships between words.

For instance, an experiment at the time showed that the vector operation (King - Man) + Woman produced a vector closest to that of the word Queen.

That’s pretty interesting, isn’t it?

In fact, the following relationships were also found to be true:

Paris - France + Italy ≈ Rome

Summer - Hot + Cold ≈ Winter

Actor - Man + Woman ≈ Actress

and more.
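Here is a minimal sketch of how such analogy queries are answered: compute vec(a) - vec(b) + vec(c), then return the nearest word by cosine similarity, excluding the three query words. The 3-dimensional vectors below are hand-made toy values (loosely "royalty, maleness, femaleness"), an assumption for illustration only and not real Word2Vec embeddings, which typically have hundreds of dimensions learned from data.

```python
import math

# Hand-made toy vectors; illustrative assumptions, NOT trained Word2Vec embeddings.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.4, 0.4],
}


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, emb=EMBEDDINGS):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b, and c."""
    target = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(emb[w], target))


print(analogy("king", "man", "woman"))  # queen
```

Excluding the query words matters in practice: with real embeddings, the nearest vector to King - Man + Woman is often King itself.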

These embeddings improved performance on many NLP tasks, such as language modeling and machine translation.

Their utility is pretty evident from the fact that the Word2Vec paper today has over 40,000 citations.

Moving on, some early signs of generative AI also date back to 2013, when Diederik Kingma and Max Welling introduced variational autoencoders (VAEs).

VAEs are a type of generative model that can learn to generate new data points, such as images or text, by capturing the underlying distribution of the input data.

Unlike traditional autoencoders, which are mainly used for dimensionality reduction, VAEs can generate new data points that resemble the training data. This is primarily because they learn a probability distribution over the latent space, from which new latent points can be sampled and decoded.
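In a VAE, the encoder outputs the mean and log-variance of a Gaussian over the latent space; training minimizes a reconstruction loss plus a KL-divergence term that keeps this Gaussian close to a standard normal prior; and new data is generated by sampling from the prior and decoding. The sketch below shows two of those pieces in plain Python, the reparameterization trick and the closed-form KL term for a diagonal Gaussian. The encoder and decoder networks are left out, and `generate` takes a hypothetical `decoder` callable supplied by the caller.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps mu and log_var on a differentiable path,
    which is what lets a VAE be trained with ordinary backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def generate(decoder, latent_dim, rng):
    """Generation: sample z from the prior N(0, I) and decode it (decoder is hypothetical)."""
    z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
    return decoder(z)


rng = random.Random(0)
print(reparameterize([0.0, 1.0], [0.0, -2.0], rng))  # one random latent sample
print(kl_to_standard_normal([1.0], [0.0]))          # 0.5
```

The KL term is zero only when the encoder's Gaussian matches the prior exactly, which is what makes sampling from N(0, I) at generation time meaningful.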

This approach marked a significant step forward in generative modeling, and just a year later, in 2014, Ian Goodfellow and his colleagues introduced generative adversarial networks (GANs).

2014

GANs consisted of two neural networks, the generator and the discriminator, which were trained simultaneously through a competitive process.

The generator was tasked with creating new data samples that were indistinguishable from the training data, while the discriminator aimed to differentiate between real and generated samples.

This adversarial training process encouraged the generator to improve its ability to generate realistic samples, leading to the creation of high-quality synthetic data.
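In the original GAN paper, this competition is a minimax game over the value function V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], which the discriminator D maximizes and the generator G minimizes. The sketch below computes the two losses from a batch of discriminator outputs (probabilities that a sample is real); the networks themselves and the optimizer are left out. For the generator it uses the "non-saturating" variant suggested in that paper (maximize log D(G(z)) instead of minimizing log(1 - D(G(z)))), which gives stronger gradients early in training.

```python
import math


def discriminator_loss(d_real, d_fake):
    """-V(D, G) estimated on a batch: push D(x) toward 1 on real data and D(G(z)) toward 0."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: minimize -log D(G(z)), i.e. try to fool D."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# A confident, correct discriminator has low loss ...
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))
# ... while at the ideal equilibrium D outputs 1/2 everywhere.
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))
```

At that equilibrium the discriminator loss is 2 log 2 (about 1.386): D can do no better than guessing, because the generated samples match the data distribution.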

For instance, this is a popular random face generator website which is powered by a variant of GAN:

That’s the quality of synthetic data GANs were capable of generating.

Since 2014, GANs have been successfully applied to various tasks, including image generation, image-to-image translation, and even the generation of music and text.

In addition to the introduction of GANs, 2014 was also the year when DeepMind gained widespread attention for its groundbreaking research in reinforcement learning and neural networks.

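In December 2013, they had published a paper called “Playing Atari with Deep Reinforcement Learning,” which showed that one neural network architecture, learning directly from raw pixels, could play several classic Atari 2600 games, outperforming previous approaches on six of the seven games tested and surpassing a human expert on three of them.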

In this paper, the researchers introduced a novel algorithm called Deep Q-Network (DQN), which combined deep neural networks with Q-learning (a popular reinforcement learning algorithm).
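Q-learning estimates Q(s, a), the expected discounted return of taking action a in state s, and repeatedly nudges it toward the target r + γ · max_a' Q(s', a'). DQN keeps this target but replaces the lookup table with a convolutional network that reads game frames (and adds tricks such as experience replay). The tabular sketch below shows only the underlying Q-learning update on a hypothetical 5-state "chain" environment of our own, where the agent earns a reward of 1 for reaching the right end; the learning rate, discount, and episode counts are arbitrary assumptions.

```python
import random

N_STATES = 5            # states 0..4; state 4 is the terminal goal (hypothetical toy env)
ACTIONS = (0, 1)        # 0 = move left, 1 = move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor (assumed values)


def step(state, action):
    """Deterministic chain: reward 1 only when the goal state is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_update(q, s, a, r, s_next, done):
    """One Q-learning step: move Q(s, a) toward r + GAMMA * max_a' Q(s', a')."""
    target = r if done else r + GAMMA * max(q[s_next])
    q[s][a] += ALPHA * (target - q[s][a])


def train(episodes=500, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done, steps = 0, False, 0
        while not done and steps < max_steps:
            a = rng.choice(ACTIONS)  # purely random exploration; Q-learning is off-policy
            s_next, r, done = step(s, a)
            q_update(q, s, a, r, s_next, done)
            s, steps = s_next, steps + 1
    return q


q = train()
greedy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy)  # [1, 1, 1, 1]: always move right
```

Even though the agent explores at random, the learned values encode the optimal policy, because the update always bootstraps from the best next action rather than the one actually taken.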

This achievement was a significant milestone in AI, demonstrating the power of deep reinforcement learning to tackle challenging problems in various domains.

It also helped propel DeepMind into the spotlight, setting the stage for its subsequent achievements and eventual acquisition by Google in 2014 for a reported $500 million.

2015

In 2015, two groundbreaking advancements reshaped the landscape of deep learning:

Residual Networks (ResNets)

Residual Networks, or ResNets, were introduced by Kaiming He et al. in their paper “Deep Residual Learning for Image Recognition.”

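ResNets addressed a fundamental problem in training very deep neural networks: beyond a certain depth, adding more layers made plain networks harder to optimize. This difficulty is commonly linked to the vanishing gradient problem (the ResNet paper itself calls the symptom the degradation problem).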

In traditional deep networks, as depth increases, it becomes increasingly difficult for gradients to propagate back through the network during training, especially to the earliest layers.

ResNets largely solved this problem.

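At the core of ResNets was the concept of residual learning, implemented through skip connections. Instead of asking a stack of layers to learn a desired mapping H(x) directly, each block learns the residual F(x) = H(x) - x and outputs F(x) + x, with the input x carried around the layers by an identity shortcut. This allowed gradients to bypass one or more layers.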

This simple yet powerful concept fundamentally changed how deep networks were designed and trained. The paper trained networks with up to 152 layers, and an ensemble of ResNets won the ILSVRC 2015 classification task with a 3.57% top-5 error rate.
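A minimal sketch of the idea, in plain Python under simplifying assumptions: `residual_block` computes ReLU(F(x) + x) with a two-layer F and omits the biases, batch normalization, and convolutions that real ResNet blocks use. `chain_gradient` is a deliberately toy scalar model (each layer's F is just w · x) to show why the shortcut helps: a plain layer contributes a factor w to the gradient, while a residual layer contributes 1 + w, because the derivative of x + F(x) always includes the identity term 1.

```python
def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def relu(v):
    return [max(0.0, x) for x in v]


def residual_block(x, W1, W2):
    """y = ReLU(F(x) + x) with F(x) = W2 @ ReLU(W1 @ x); biases and batch norm omitted."""
    f = matvec(W2, relu(matvec(W1, x)))
    return relu([fi + xi for fi, xi in zip(f, x)])  # skip connection adds the input back


def chain_gradient(w, depth, residual):
    """d(output)/d(input) through `depth` toy scalar layers with F(x) = w * x.

    Plain layer:    y = w * x      -> per-layer derivative w
    Residual layer: y = x + w * x  -> per-layer derivative 1 + w
    """
    per_layer = 1.0 + w if residual else w
    return per_layer ** depth


zeros = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block([1.0, 2.0], zeros, zeros))   # [1.0, 2.0]: with F = 0 the block is an identity
print(chain_gradient(0.01, 50, residual=False))  # on the order of 1e-100: the gradient vanishes
print(chain_gradient(0.01, 50, residual=True))   # about 1.64: the gradient survives
```

The first print also illustrates the paper's intuition that if an identity mapping is what a block should compute, it is easy to learn: the residual branch only has to drive its weights toward zero.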

Attention mechanism

Yes, the attention mechanism existed before the Transformer architecture was introduced in 2017 (more on this shortly).

For more context, note that recurrent neural networks (RNNs) and long short-term memory (LSTM) networks were introduced well before 2015.

As deep learning grew in popularity after 2012, these networks were widely applied to language-related tasks.

However, a key challenge emerged in effectively capturing long-range dependencies within sequences. These models struggled to maintain contextual information across lengthy sequences, leading to suboptimal performance, especially in tasks like machine translation and text summarization.

Another major issue was their inherent sequential processing, which inhibited parallelism.

This meant that RNNs and similar models processed one token at a time, making them computationally intensive and slow, especially for longer sequences.
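A minimal sketch of a vanilla (Elman-style) RNN forward pass makes both limitations visible; biases are omitted and the weights in the example are arbitrary assumptions. Each hidden state h_t = tanh(W_x · x_t + W_h · h_(t-1)) needs the previous one, so the loop over positions cannot run in parallel, and information from early tokens is squeezed through every later step. LSTMs add gates to preserve information better, but keep the same step-by-step dependency.

```python
import math


def rnn_forward(xs, W_x, W_h, h0):
    """Vanilla RNN: h_t = tanh(W_x @ x_t + W_h @ h_{t-1}); returns every hidden state."""

    def matvec(W, v):
        return [sum(w * x for w, x in zip(row, v)) for row in W]

    h, states = h0, []
    for x in xs:  # strictly one token at a time: step t must wait for step t - 1
        pre = [a + b for a, b in zip(matvec(W_x, x), matvec(W_h, h))]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states


# One informative first token followed by "empty" tokens:
states = rnn_forward([[1.0], [0.0], [0.0]], W_x=[[1.0]], W_h=[[0.5]], h0=[0.0])
print(states)  # the first token's signal shrinks at every subsequent step
```

With these weights the trace of the first token decays step by step, a small-scale version of the long-range dependency problem described above.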

The concept of attention in neural networks was introduced by Dzmitry Bahdanau et al. in their paper “Neural Machine Translation by Jointly Learning to Align and Translate” to improve the performance of sequence-to-sequence models in tasks such as machine translation.

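It effectively captured long-range dependencies in the text by letting the decoder selectively attend to the relevant parts of the input sequence: at each output step, the model computes a weighted average of all encoder hidden states, with the weights reflecting how relevant each input position is to the word being generated.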

As a result, it led to significant performance gains in various NLP tasks.
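Here is a minimal plain-Python sketch of the additive scoring used in Bahdanau et al.'s paper: each encoder state h_j is scored against the previous decoder state s with e_j = v_a · tanh(W_a s + U_a h_j), the scores are normalized with a softmax, and the context vector is the weighted sum of the h_j. In the paper W_a, U_a, and v_a are learned jointly with the encoder and decoder; here they are simply passed in, and the identity matrices in the example are arbitrary assumptions.

```python
import math


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(s_prev, encoder_states, W_a, U_a, v_a):
    """Bahdanau-style attention; returns (weights, context vector).

    e_j   = v_a . tanh(W_a @ s_prev + U_a @ h_j)   alignment score for source position j
    alpha = softmax(e)                              how much to attend to each h_j
    c     = sum_j alpha_j * h_j                     context vector passed to the decoder
    """
    query = matvec(W_a, s_prev)
    scores = []
    for h in encoder_states:
        hidden = [math.tanh(q + k) for q, k in zip(query, matvec(U_a, h))]
        scores.append(sum(v * x for v, x in zip(v_a, hidden)))
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(a * h[d] for a, h in zip(weights, encoder_states)) for d in range(dim)]
    return weights, context


I2 = [[1.0, 0.0], [0.0, 1.0]]
weights, context = additive_attention(
    [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]], I2, I2, [1.0, 1.0]
)
print(weights, context)
```

Because the context vector is recomputed for every output word, the decoder no longer has to squeeze the whole source sentence into a single fixed-length vector.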

Back then, attention mechanisms were used in conjunction with existing architectures like RNNs and LSTMs, and they showed significant improvements compared to their non-attention counterparts.

Nonetheless, they still suffered from limitations such as computational inefficiency because of the limited parallelism of the underlying neural network architecture.

The breakthrough came in 2017, when researchers at Google introduced the Transformer architecture, which eliminated the need for recurrence in sequence modeling entirely. We shall get back to this shortly.

Moving on, in 2015, OpenAI was founded as a non-profit artificial intelligence research organization with the mission to democratize AI research and ensure that artificial general intelligence (AGI) benefits all of humanity, and it quickly became a prominent player in the AI research community.

While OpenAI did not release any major research papers or AI systems in 2015, its formation generated significant excitement and interest within the AI community.

2016

AI witnessed several significant advancements across various domains in 2016. Some of the key developments during this period include (in no particular order):

AlphaGo’s Victory: One of the most notable events of 2016 was Google DeepMind’s AlphaGo defeating Lee Sedol, one of the world’s best players of the ancient board game Go, 4-1 in a five-game match in March 2016. This demonstrated that AI could master complex games that were previously thought to require uniquely human expertise.

WaveNet and WaveRNN: DeepMind (owned by Google at this time) introduced WaveNet in 2016, a deep generative model for raw audio waveforms. WaveNet revolutionized speech synthesis and audio generation by producing more natural-sounding speech than traditional methods. Later, in 2018, the team also developed WaveRNN, a simpler, faster, and more computationally efficient model that could run on devices such as mobile phones rather than in a data center. Over time, both WaveNet and WaveRNN became crucial components of many of Google’s best-known services, such as Google Assistant, Maps Navigation, Voice Search, and Cloud Text-To-Speech.

2017

2017 was a big year for NLP.

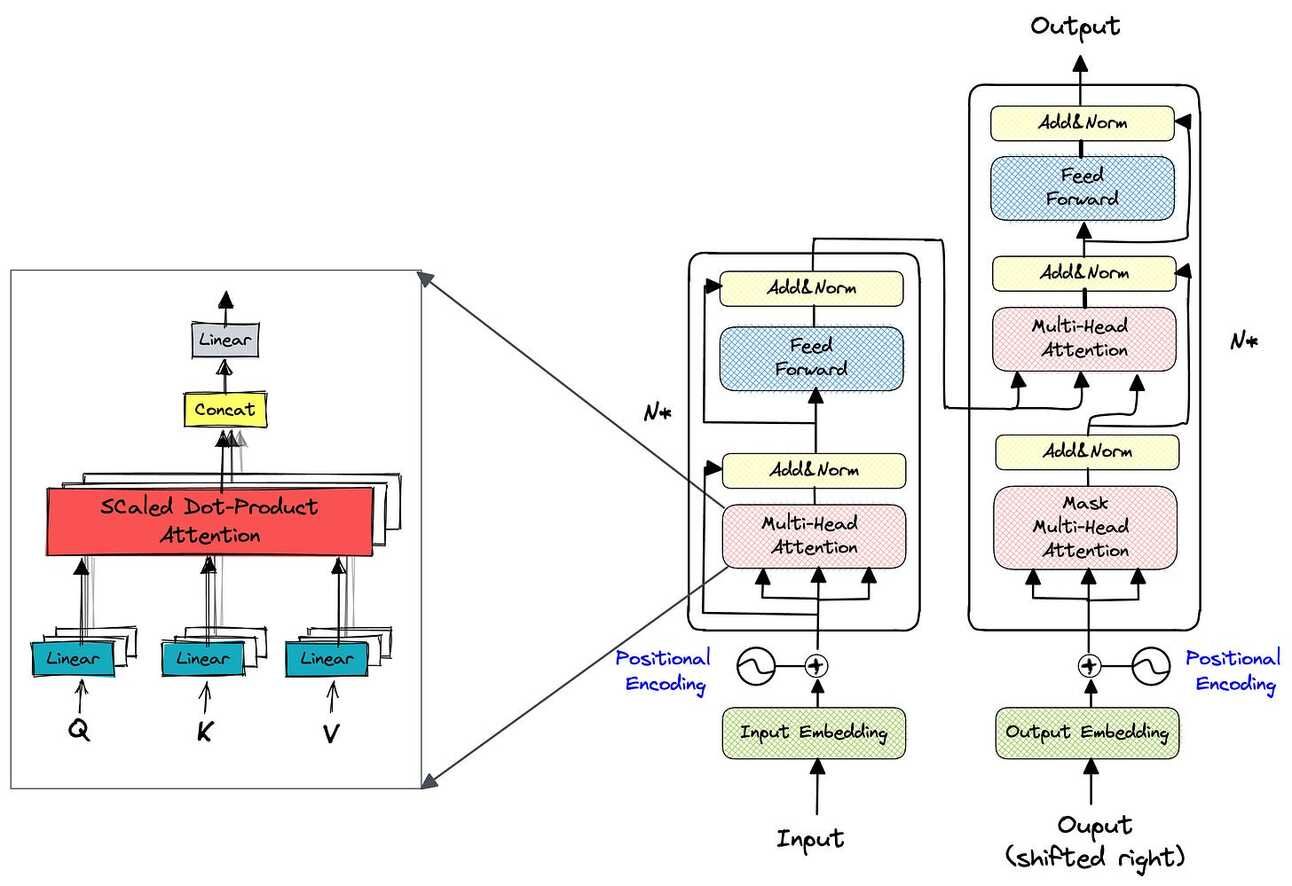

This was due to the introduction of the Transformer architecture in the paper “Attention is All You Need,” which fundamentally changed how researchers approached sequence modeling.

Unlike traditional sequence-to-sequence models that relied on recurrent neural networks (RNNs), the Transformer architecture used self-attention mechanisms to draw global and long-range dependencies between input and output without sequential processing.
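The core operation of the Transformer is scaled dot-product attention, Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V. The plain-Python sketch below implements just that formula and applies it as self-attention with Q = K = V = X. This is a simplification: the real Transformer first projects X with learned matrices into queries, keys, and values, runs several attention heads in parallel, and adds positional encodings and masking, none of which are shown here.

```python
import math


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for lists of row vectors (one row per position)."""
    d_k = len(K[0])
    out, all_weights = [], []
    for q in Q:  # each query row is independent, so all positions could be computed in parallel
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        all_weights.append(w)
        out.append([sum(wj * v[d] for wj, v in zip(w, V)) for d in range(len(V[0]))])
    return out, all_weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors (assumed values)
out, weights = scaled_dot_product_attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])  # each position attends to every position in one step
```

Unlike the RNN loop, no position waits on another: every token looks at every other token directly, however far apart they are, which is what makes Transformers both parallelizable and good at long-range dependencies.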

Since then, Transformers have largely displaced RNNs and LSTMs in NLP, and this single paper laid the foundation for the generative AI breakthroughs we are witnessing today.

The ability to capture long-range dependencies proved crucial for tasks like machine translation, where the meaning of a word may depend on words that appear much earlier or later in the sentence.

The Transformer architecture quickly became the state-of-the-art model for various NLP tasks, including machine translation, text summarization, and language modeling.

Furthermore, the success of the Transformer architecture highlighted the importance of attention mechanisms in deep learning, inspiring further research into novel architectures that leverage attention mechanisms for various tasks beyond NLP.

Part two of this article is available here:


Thanks for reading!