In part 1 of this two-part AI review series, we looked at the most prominent research in AI from 2012 to 2017.

Today, we shall continue with part 2 and discuss the developments that took place from 2018 to 2023.

Let’s begin!

2018

In 2018, the momentum from the introduction of the Transformer architecture in late 2017 continued to drive significant advancements in NLP and machine learning.

Two key models built on the Transformer architecture had a profound impact on the field: BERT (Bidirectional Encoder Representations from Transformers), introduced in “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” and GPT-1, introduced in “Improving Language Understanding by Generative Pre-Training.”

BERT

Recall our discussion of word embeddings in the 2013 section of part 1.

Those embeddings showed promising results in learning the relationships between words.

For instance, at that time, an experiment showed that the vector operation (King - Man) + Woman returned a vector near the word Queen.

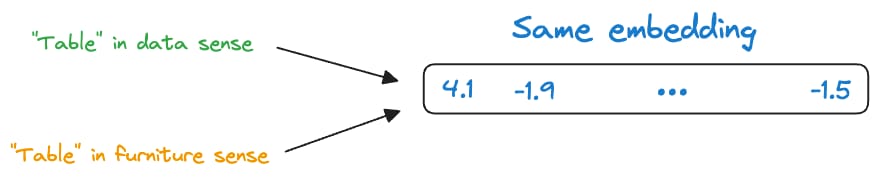
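To make this arithmetic concrete, here is a minimal sketch in Python. The three-dimensional vectors are made up for illustration. Real word2vec vectors have hundreds of dimensions and are learned from data. The code computes (King - Man) + Woman and then returns the remaining word whose vector is closest by cosine similarity.

```python
import math

# Hypothetical toy embeddings; real word2vec vectors are learned and much larger.
# The dimensions loosely encode (royalty, maleness, femaleness).
embeddings = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

print(analogy("king", "man", "woman"))  # queen
```

The input words are excluded from the candidates because the nearest vector to the result is often one of the inputs themselves.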

However, while these embeddings captured relationships between words, they had a major limitation.

Consider the following two sentences:

Convert this data into a table in Excel.

Put this bottle on the table.

Here, the word “table” conveys two entirely different meanings:

The first sentence uses “table” in its data sense (rows and columns of values).

The second sentence uses “table” in its furniture sense.

Yet the embedding models proposed in 2013 were static, meaning they assigned the same representation to a word irrespective of its context.

In other words, these embeddings didn’t consider that a word may have different usages in different contexts.

BERT, developed by Google AI, addressed this limitation by introducing two new pretraining techniques for language representation.

These pretraining objectives were masked language modeling (MLM) and next sentence prediction (NSP), which helped BERT generate context-aware embeddings.

In MLM, BERT was trained to predict missing words in a sentence. To do this, 15% of the input tokens were selected at random. Of these, 80% were replaced with a special [MASK] token, 10% were replaced with a random token, and 10% were left unchanged.
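The masking recipe can be sketched in a few lines of Python. This is a simplified illustration over whitespace-split words with a hypothetical toy vocabulary. It is not BERT's actual implementation, which operates on WordPiece tokens. It follows BERT's published scheme: each token is selected with probability 15%, and a selected token becomes [MASK] 80% of the time, becomes a random token 10% of the time, and is left unchanged 10% of the time. It also records which positions the model must predict.

```python
import random

MASK = "[MASK]"
VOCAB = ["the", "bottle", "table", "data", "put", "on", "this", "excel"]  # hypothetical toy vocabulary

def mask_tokens(tokens, mask_prob=0.15, rng=random):
    """Apply BERT-style masking; return (masked tokens, {position: original token})."""
    masked = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                       # token is not part of the MLM objective
        targets[i] = tok                   # the model must predict the original token here
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(VOCAB)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return masked, targets

rng = random.Random(0)
sentence = "put this bottle on the table".split()
masked, targets = mask_tokens(sentence, mask_prob=0.5, rng=rng)
print(masked, targets)
```

The example raises `mask_prob` to 0.5 so that the effect is visible on a six-word sentence. Keeping some selected tokens unchanged or randomized means the model cannot rely on seeing [MASK], a token that never appears at fine-tuning time.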

BERT then processed the masked sentence bidirectionally, considering both the left and right context of each masked word. This is why it was named “Bidirectional Encoder Representations from Transformers (BERT).”

For each masked word, BERT predicted the original word from its context by producing a probability distribution over the entire vocabulary. During training, a cross-entropy loss penalized the model for assigning low probability to the original word. At prediction time, the word with the highest probability is the model’s guess.
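For a single masked position, the prediction step amounts to a softmax over the vocabulary. The sketch below uses a hypothetical five-word vocabulary and made-up scores (logits) in place of BERT's output layer. It shows two things: the inference-time choice, which is the most probable word, and the training loss, which is the cross-entropy (the negative log-probability of the original word).

```python
import math

vocab = ["table", "chair", "bottle", "excel", "data"]  # hypothetical toy vocabulary
logits = [3.2, 1.1, 0.3, 2.0, -0.5]                    # made-up scores for one [MASK] position

def softmax(scores):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax(logits)
predicted = vocab[probs.index(max(probs))]        # inference: most likely word

original = "table"                                # the word that was actually masked
loss = -math.log(probs[vocab.index(original)])    # training: cross-entropy at this position

print(predicted, round(loss, 3))
```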

In NSP, BERT was trained to determine whether two input sentences appeared consecutively in a document or were randomly paired sentences from different documents.

During training, BERT was given pairs of sentences as input. Half of these pairs were consecutive sentences from the same document (positive examples), and the other half were randomly paired sentences from different documents (negative examples).

BERT was then trained to predict either that the second sentence followed the first in the original document (label 1) or that it was a randomly paired sentence (label 0).
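Building the NSP training pairs can be sketched as follows. The documents are hypothetical lists of sentences, and the helper name `make_nsp_pairs` is illustrative only. Half the time, each sentence is paired with its true successor (label 1). Otherwise it is paired with a sentence drawn from a different document (label 0). The sketch assumes there are at least two documents.

```python
import random

def make_nsp_pairs(documents, rng=random):
    """Create (sentence_a, sentence_b, label) triples for next sentence prediction."""
    pairs = []
    for d, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            sent_a = doc[i]
            if rng.random() < 0.5:
                pairs.append((sent_a, doc[i + 1], 1))   # positive: the true next sentence
            else:
                other = rng.choice([k for k in range(len(documents)) if k != d])
                sent_b = rng.choice(documents[other])
                pairs.append((sent_a, sent_b, 0))       # negative: sentence from another document
    return pairs

docs = [
    ["Open the spreadsheet.", "Convert this data into a table.", "Save the file."],
    ["Put this bottle on the table.", "Then wipe the counter."],
]
for a, b, label in make_nsp_pairs(docs, rng=random.Random(42)):
    print(label, "|", a, "->", b)
```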

Through this training, the model learned how different words related to each other within and across sentences.

This learning process allowed BERT to create contextualized embeddings for words and sentences. Earlier embeddings such as GloVe and word2vec were static.
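A toy example shows the difference between static and contextual embeddings. The sketch below is a deliberate simplification and not BERT. Each word gets a made-up static vector. A single unparameterized self-attention step then mixes in context: each word's output is a softmax-weighted average of all word vectors in its sentence. A static lookup gives “table” the same vector in both example sentences, but the attention output differs.

```python
import math

# Hypothetical 2-d static vectors: dimension 0 ~ "data-ness", dimension 1 ~ "furniture-ness".
static = {
    "convert": [1.0, 0.0], "data": [1.0, 0.0], "excel": [0.9, 0.1],
    "put": [0.0, 0.5], "bottle": [0.1, 0.9], "on": [0.0, 0.3],
    "table": [0.5, 0.5],
}

def self_attention(words):
    """One unparameterized attention step: softmax(q . k) weights over the sentence."""
    vecs = [static[w] for w in words]
    out = []
    for q in vecs:
        scores = [sum(a * b for a, b in zip(q, k)) for k in vecs]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[d] for w, v in zip(weights, vecs)) / total for d in range(2)])
    return out

s1 = ["convert", "data", "table", "excel"]
s2 = ["put", "bottle", "on", "table"]

print(static["table"], static["table"])  # static: identical in both sentences
ctx1 = self_attention(s1)[s1.index("table")]
ctx2 = self_attention(s2)[s2.index("table")]
print(ctx1, ctx2)                        # contextual: pulled toward each sentence's sense
```

BERT stacks many such layers, each with learned query, key and value projections, but the principle is the same. A word's output vector depends on its neighbors.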

GPT-1

In 2018, OpenAI introduced the Generative Pretrained Transformer 1 (GPT-1), a large-scale open-source language model based on the Transformer architecture.

GPT-1 represented a significant advancement in NLP. It showed that a Transformer pretrained generatively on unlabeled text could be adapted to a wide range of language understanding tasks.

GPT-1 was pretrained on BooksCorpus, a dataset of over 7,000 unpublished books. The long stretches of contiguous text helped it learn long-range dependencies in natural language.

The model consisted of 117 million parameters and was trained using a simple objective: predict the next word in a sentence given the previous words.
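This next-word objective turns raw text into training examples without any human labels. The minimal sketch below uses whitespace tokens rather than GPT-1's actual byte-pair-encoded tokens. Every prefix of a sentence becomes an input, and the word that follows that prefix becomes the target.

```python
def next_word_examples(text):
    """Split text into (context, next_word) pairs for causal language modeling."""
    tokens = text.split()
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

for context, target in next_word_examples("put this bottle on the table"):
    print(" ".join(context), "->", target)
```

In practice, a Transformer computes all of these predictions in one pass over the sequence. A causal attention mask stops each position from seeing the words that come after it.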

Despite the simplicity of this objective, GPT-1 learned general-purpose language representations, showcasing the potential of generative pretraining in NLP.

Its key contribution was a two-stage recipe: generative pretraining on unlabeled text, followed by supervised fine-tuning on each target task. The architecture needed only minimal task-specific changes. The fine-tuned model improved on the state of the art in 9 of the 12 tasks studied, spanning natural language inference, question answering, semantic similarity, and text classification.

While GPT-1 was not as sophisticated as later iterations in the form of GPT-2, GPT-3, and GPT-4, it laid the groundwork for the development of large-scale language models based on the Transformer architecture.

2019

Building on the success of BERT in 2018, researchers introduced several variants and improvements to the model in 2019.

For instance, RoBERTa, from Facebook AI, was designed to address some of the limitations of BERT and further improve language representation learning.

RoBERTa was trained using a larger corpus of data and for a longer duration compared to BERT, which led to improved performance on downstream NLP tasks.

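RoBERTa also used dynamic masking. Rather than fixing each sequence's masked positions once during data preprocessing, it generated a new masking pattern every time a sequence was fed to the model. RoBERTa also dropped BERT's NSP objective.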
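The difference between static and dynamic masking can be sketched as follows. This is a simplification that uses a hypothetical helper, `choose_mask_positions`. It only picks positions and skips the rule for replacing the chosen tokens. Static masking fixes the positions once and reuses them every epoch, while dynamic masking draws fresh positions each time the sequence is seen.

```python
import random

def choose_mask_positions(n_tokens, mask_prob, rng):
    """Pick which token positions to mask (hypothetical helper)."""
    return sorted(i for i in range(n_tokens) if rng.random() < mask_prob)

rng = random.Random(7)
n_tokens, epochs = 20, 4

# Static masking: decided once during preprocessing, then reused every epoch.
fixed = choose_mask_positions(n_tokens, 0.15, rng)
static_masks = [fixed for _ in range(epochs)]

# Dynamic masking: a new pattern is sampled every time the sequence is fed to the model.
dynamic_masks = [choose_mask_positions(n_tokens, 0.15, rng) for _ in range(epochs)]

print("static: ", static_masks)
print("dynamic:", dynamic_masks)
```

With dynamic masking, the model sees many different masked versions of each sentence over a long training run.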

ALBERT (A Lite BERT), from Google Research, aimed to reduce BERT's parameter count and memory footprint while maintaining or improving its performance.

ALBERT achieved this by factorizing the embedding parameters into two smaller matrices and by sharing parameters across layers. It also replaced NSP with a sentence-order prediction (SOP) objective. With these modifications, ALBERT achieved state-of-the-art performance on various NLP benchmarks using far fewer parameters than comparable BERT models.
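Parameter counts make the embedding factorization easiest to see. BERT uses a single V × H embedding matrix. ALBERT instead uses a V × E matrix followed by an E × H projection, where E is much smaller than H. The sizes below are illustrative values in the range of BERT-base and ALBERT-base: a 30,000-token vocabulary, H = 768 and E = 128.

```python
V = 30_000   # vocabulary size (illustrative)
H = 768      # hidden size (illustrative, as in BERT-base)
E = 128      # factorized embedding size (illustrative)

bert_style = V * H              # one V x H embedding matrix
albert_style = V * E + E * H    # V x E lookup followed by an E x H projection

print(f"V x H:         {bert_style:,}")    # 23,040,000
print(f"V x E + E x H: {albert_style:,}")  # 3,938,304
print(f"reduction:     {bert_style / albert_style:.1f}x")
```

Because the vocabulary term is multiplied by the small E instead of H, the embedding parameters shrink roughly sixfold in this configuration.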

DistilBERT, from Hugging Face, was designed to distill the knowledge from a large BERT model into a smaller, more efficient model.

For context, DistilBERT had about 40% fewer parameters than BERT and ran about 60% faster at inference.

Yet, it retained approximately 97% of the natural language understanding (NLU) capabilities of BERT.

DistilBERT achieved this through knowledge distillation. A smaller “student” model was trained to mimic the output probability distributions of the larger BERT “teacher” model.
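At its core, knowledge distillation is a loss between the softened output distributions of the teacher and the student. The logits and temperature below are made up for illustration. DistilBERT's actual training objective combined a distillation loss like this one with the masked language modeling loss and a cosine embedding loss.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Cross-entropy between the teacher's soft targets and the student's predictions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(p_teacher, p_student))

teacher = [4.0, 1.5, 0.5, -1.0]        # made-up teacher logits over a 4-word vocabulary
close_student = [3.8, 1.4, 0.6, -0.9]  # a student that mimics the teacher well
far_student = [0.0, 3.0, 0.0, 1.0]     # a student that does not

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))  # larger loss
```

The soft targets tell the student more than the single correct word does. They also show which alternatives the teacher found plausible.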

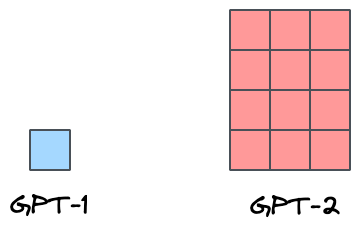

Next, in 2019, OpenAI released the Generative Pre-trained Transformer 2 (GPT-2), a highly anticipated successor to the GPT-1 model.

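Like GPT-1, GPT-2 was eventually open-sourced. However, OpenAI released it in stages, starting with smaller versions, and the full model followed only months later. GPT-2 represented a significant leap in NLP due to its size, scale, and capabilities.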

More specifically, GPT-2 was roughly 13 times bigger than GPT-1:

GPT-1: 117 Million parameters.

GPT-2: 1.5 Billion parameters.

The release of GPT-2 also sparked debate about the ethical implications of large-scale language models. People raised concerns that such models could be misused to generate fake news, misinformation, or harmful content, and OpenAI itself acknowledged these risks.

2020

In early 2020, the world faced the unprecedented challenges posed by COVID-19. The pandemic disrupted lives, economies, and industries around the globe, including the field of artificial intelligence (AI).

Despite these challenges, AI development continued to advance and flourish.

In 2020, OpenAI followed GPT-2 with GPT-3, which was over 115 times bigger than GPT-2:

GPT-1: 117 Million parameters.

GPT-2: 1.5 Billion parameters.

GPT-3: 175 Billion parameters.
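The size ratios follow directly from the parameter counts. A quick check in Python:

```python
params = {"GPT-1": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}

gpt2_vs_gpt1 = params["GPT-2"] / params["GPT-1"]
gpt3_vs_gpt2 = params["GPT-3"] / params["GPT-2"]

print(f"GPT-2 / GPT-1: {gpt2_vs_gpt1:.1f}x")  # 12.8x
print(f"GPT-3 / GPT-2: {gpt3_vs_gpt2:.1f}x")  # 116.7x
```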

GPT-3 was a major milestone in NLP and the most prominent language model at the time of its release.

It demonstrated remarkable capabilities in generating human-like text and performing a wide range of NLP tasks, such as translation, question answering, and text generation.

Like its predecessors, GPT-3 was trained with self-supervised learning. Traditional supervised learning trains models on labeled data. Self-supervised learning instead trains models on unlabeled data by having them predict parts of the input. In GPT-3’s case, that meant predicting the next token given all the previous ones.

This approach allowed models like GPT-3 to learn from vast amounts of text data available on the internet, enabling them to acquire a broad understanding of language and context.

Moreover, what made the GPT-3 model truly remarkable was its few-shot and zero-shot learning capabilities.

Few-shot learning refers to the model's ability to perform a task given only a few examples written into its prompt, without any updates to its weights. Zero-shot learning allows the model to perform a task from an instruction alone, with no examples at all.
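In practice, the “examples” in few-shot learning are simply text in the prompt. The sketch below builds zero-shot and few-shot prompts for an English-to-French translation task, similar in spirit to the examples in the GPT-3 paper. The helper name `build_prompt` and the exact prompt format are illustrative assumptions. You would send the resulting string to whatever model or API you use.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt: task description, optional worked examples, then the query."""
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    lines.append(f"{query} =>")
    return "\n".join(lines)

task = "Translate English to French:"
demos = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]

zero_shot = build_prompt(task, [], "cheese")
few_shot = build_prompt(task, demos, "cheese")
print(zero_shot)
print("---")
print(few_shot)
```

The model is identical in both cases. Only the prompt changes, and the model is expected to continue the pattern after the final `=>`.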

This showcased the potential of large-scale language models like GPT-3 to perform a wide range of tasks with minimal supervision, opening up new possibilities for natural language understanding and generation.

Moreover, building on the success of transformers in NLP, researchers extended the transformer architecture to computer vision tasks.

Vision Transformers (ViTs) demonstrated competitive performance on image classification tasks, challenging the dominance of convolutional neural networks (CNNs) in computer vision.

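ViTs split an image into fixed-size patches and treat each patch as a token. Self-attention therefore lets every patch attend to every other patch from the first layer onward, which helps on tasks that require understanding global context. When pretrained on large enough datasets, ViTs matched or exceeded state-of-the-art CNNs on image classification while requiring less compute to pretrain. However, they lack the built-in inductive biases of convolutions and typically need more training data.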
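A Transformer can process images only after the image is cut into fixed-size patches, with each flattened patch treated as a token. Below is a minimal sketch in plain Python. The image is a nested list of pixel values with a single channel for simplicity. Real ViTs use RGB input and then apply a learned linear projection plus position embeddings to each patch. The 224 × 224 image with 16 × 16 patches matches the configuration popularized by the original ViT work.

```python
def patchify(image, patch):
    """Split an H x W image (list of rows) into flattened, non-overlapping patch x patch tokens."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by patch size")
    tokens = []
    for top in range(0, height, patch):       # each row of patches
        for left in range(0, width, patch):   # each column of patches
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

size, patch = 224, 16
image = [[(r * size + c) % 256 for c in range(size)] for r in range(size)]  # dummy pixel values
tokens = patchify(image, patch)
print(len(tokens), "tokens of length", len(tokens[0]))  # 196 tokens of length 256
```

A 224 × 224 image thus becomes a sequence of 14 × 14 = 196 tokens. That sequence is short enough for full self-attention.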

2021

While COVID-19 was still a concerning global issue in 2021, the field of AI continued to make significant strides, with several groundbreaking research developments that pushed the boundaries of what AI can achieve.

Some of the most prominent AI research in 2021 included:

DALL-E: OpenAI introduced DALL-E, a powerful generative model capable of creating images from textual descriptions. Building on the success of GPT-3, DALL-E demonstrated the potential of AI in creative tasks, generating visually stunning and conceptually creative images based on textual prompts.

GitHub Copilot: GitHub Copilot, developed by GitHub in collaboration with OpenAI, was an AI-powered code completion tool that helped developers write code. It was powered by OpenAI Codex, a GPT-3 descendant fine-tuned on code, which produced code suggestions and auto-completions that streamlined programming.

MT-NLG: MT-NLG (Megatron-Turing Natural Language Generation) was a large-scale language model developed by Microsoft and NVIDIA. With 530B parameters, MT-NLG was about three times larger than GPT-3 (175B). It could perform a wide range of NLP tasks, including language translation, summarization, and text generation.

Switch Transformer: Developed by researchers at Google Research, the Switch Transformer was a sparsely activated mixture-of-experts (MoE) model. A learned router sent each token to just one of many expert feed-forward networks, so only a small fraction of the parameters was used for any given token. With up to 1.6 trillion parameters, the Switch Transformer was one of the largest AI models ever created. It showed how sparsity could scale Transformer-based architectures efficiently.
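The routing idea behind the Switch Transformer can be sketched in a few lines. This toy version uses made-up router weights. The “experts” are trivial functions standing in for real feed-forward networks. The sketch shows the top-1 rule:

- A router scores every expert.
- The token goes only to the highest-scoring expert.
- That expert's output is multiplied by the router probability.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical router weights: one weight vector per expert (token dimension 2, 3 experts).
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
# Hypothetical experts: stand-ins for feed-forward networks.
experts = [lambda x: [2 * v for v in x],
           lambda x: [-v for v in x],
           lambda x: [v + 1 for v in x]]

def switch_layer(token):
    """Route one token to its single highest-probability expert (top-1 routing)."""
    scores = [sum(w * v for w, v in zip(weights, token)) for weights in router]
    probs = softmax(scores)
    k = probs.index(max(probs))  # only this expert runs for the token
    return k, [probs[k] * v for v in experts[k](token)]

for token in ([3.0, 0.5], [0.2, 2.0]):
    expert, output = switch_layer(token)
    print(token, "-> expert", expert, output)
```

The real model also adds a load-balancing loss and per-expert capacity limits, which this sketch leaves out. Adding experts increases total parameters, while the compute per token stays roughly that of a single expert.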

2022

2022 was truly the year of generative AI, marked by a significant shift toward democratization and ease of use.

Advances in both NLP and computer vision (CV), coupled with user-friendly interfaces, put the power of generative AI into the hands of everyday users as never before.

DALL-E 2: Built upon the success of the original DALL-E, DALL-E 2 introduced further advancements in generative image synthesis.

Stable Diffusion: Stable Diffusion, an open-weight latent diffusion model, generated high-quality images with realistic details and could run on consumer GPUs.

PaLM: Google introduced PaLM (Pathways Language Model), an ambitious effort to build one of the largest language models. At 540B parameters, PaLM was slightly larger than MT-NLG (530B), the previous record holder among dense models. This made PaLM the largest dense language model at the time.

Midjourney: Midjourney was arguably one of the most capable text-to-image models released in 2022.

But in terms of impact, none of these developments came close to ChatGPT, released in late 2022. It reportedly became the fastest-growing consumer application up to that point, reaching an estimated 100M users within about two months.

While Midjourney and Stable Diffusion were accessible to general users even before ChatGPT, prompting these models to generate the desired output was still a convoluted task.

Despite their capabilities, the user experience with these models was often characterized by trial and error, requiring users to refine their prompts, which introduced friction.

This was not primarily a modeling issue. It came from the inherent complexity of the text-to-image task itself.

Generating images from textual descriptions is inherently challenging, as it requires the model to understand and interpret the nuanced details and context provided in the text to create a coherent and accurate image.

Additionally, the subjective nature of creativity and visual interpretation adds another layer of complexity, as different users may have varying expectations and interpretations of the generated images.

Part of ChatGPT's success came from the simplicity of the task: you type a question and read an answer. It also had several key technical features and innovations that set it apart from other conversational AI models:

RLHF (Reinforcement Learning from Human Feedback): ChatGPT was fine-tuned with RLHF. Human labelers ranked alternative model responses, and these rankings were used to train a reward model. The language model was then optimized against that reward model, which made its responses more relevant, helpful, and engaging.

Large-scale pretraining: ChatGPT was built on a GPT-3.5 model pretrained on a vast amount of text and code. That model was then fine-tuned on conversational demonstrations written by human trainers, which let it capture the nuances of human conversation and handle a wide range of topics and conversational styles.

Conversation-First Structure: Unlike traditional chatbots that focused on completing specific tasks or providing information, ChatGPT was designed with a conversation-first structure. This meant that the model prioritized maintaining a coherent and engaging conversation with users, rather than simply providing factual answers or completing predefined tasks.

User-Centric and Minimalist Design: ChatGPT offered a simple, minimalist chat interface that anyone could use without technical knowledge.
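The reward-model step of RLHF can be sketched with the pairwise loss used in OpenAI's InstructGPT work. Each training example is a prompt with two responses: one that labelers preferred and one they rejected. The reward model is trained so that the preferred response scores higher. The scalar rewards below are made-up numbers standing in for a neural network's outputs. The sketch covers only the reward model's objective and leaves out the PPO stage that follows.

```python
import math

def pairwise_reward_loss(reward_chosen, reward_rejected):
    """-log(sigmoid(r_chosen - r_rejected)): small when the preferred response scores higher."""
    margin = reward_chosen - reward_rejected
    return math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))

print(pairwise_reward_loss(2.0, -1.0))  # preferred response already ranked higher: small loss
print(pairwise_reward_loss(-1.0, 2.0))  # ranking is wrong: large loss
```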

2023

Like 2022, 2023 was dominated by GenAI, with models such as LLaMA, Mistral, and GPT-4 pushing its boundaries further.

We covered these models in a recent issue.

Researchers also actively explored ways to make chat-based LLMs easier to build, including simpler procedures for fine-tuning LLMs after pretraining.

1) QLoRA

Fine-tuning means adjusting the weights of a pretrained model on a new dataset to improve its performance on a specific task.

This technique has been used successfully for a long time. However, problems arise when we apply it to much larger models, such as LLMs, primarily because of:

Their size.

The cost involved in fine-tuning all weights.

The cost involved in maintaining all large fine-tuned models.

LoRA (Low-Rank Adaptation), introduced in 2021, addressed these limitations of traditional fine-tuning.

The core idea was to freeze the original weight matrices and train only a low-rank update for some or all of them. Each update is the product of two small low-rank matrices.

For instance, in the graphic below, the bottom network represents the large pre-trained model, and the top network represents the model with LoRA layers.

The idea is to train only the LoRA network and freeze the large model, which immensely reduces the number of trainable parameters.
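The LoRA update can be sketched with tiny matrices in plain Python. The frozen weight W0 stays untouched. Only the low-rank factors A (r × d_in) and B (d_out × r) would be trained, and the layer computes W0·x + (alpha / r)·B·(A·x). Following the LoRA paper, B starts at zero, so the adapted layer initially behaves exactly like the original. The sizes and random values here are made up for illustration.

```python
import random

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

d_out, d_in, r, alpha = 4, 4, 2, 4  # illustrative sizes; real layers are e.g. 4096 x 4096
rng = random.Random(0)

W0 = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen pretrained weights
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]    # trainable, r x d_in
B = [[0.0] * r for _ in range(d_out)]                                # trainable, d_out x r, zero-init

def lora_forward(x):
    """h = W0 x + (alpha / r) * B (A x); during training, only A and B would be updated."""
    base = matvec(W0, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]

x = [1.0, 2.0, 3.0, 4.0]
print(lora_forward(x) == matvec(W0, x))  # True: B = 0, so the adapter starts as a no-op

# Trainable-parameter count for a 4096 x 4096 layer with rank 8:
full, lora = 4096 * 4096, 8 * (4096 + 4096)
print(full, lora)  # 16777216 65536
```

For that 4096 × 4096 layer, the adapter trains about 0.4% as many parameters as full fine-tuning would. Only the small A and B matrices need to be stored per task.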

QLoRA is quantized LoRA.

More specifically, Quantized Low-Rank Adaptation (QLoRA) is an improvement on the LoRA technique discussed above, which further addresses the memory limitations associated with fine-tuning large models using LoRA.

For simplicity, consider a weight matrix with 25 million parameters (for example, 5,000 × 5,000).

Typically, these 25 million parameters are represented as float32, which requires 32 bits (4 bytes) per parameter. This leads to a significant memory footprint, especially for LLMs with billions of parameters.

This results in a memory utilization of 25 million × 4 bytes = 100 million bytes for this matrix alone, which is 0.1 GB.
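Repeating the calculation at lower precisions shows why quantization matters. A quick check in Python for the 25-million-parameter matrix, taking 1 GB as 10^9 bytes:

```python
n_params = 25_000_000  # parameters in the example weight matrix

# Memory in GB at each precision: bits / 8 bytes per parameter.
memory_gb = {bits: n_params * bits / 8 / 1e9 for bits in (32, 16, 8, 4)}

for bits, gb in memory_gb.items():
    print(f"{bits:>2}-bit: {gb:.4f} GB")
```

Each halving of the bit width halves the memory, so 4-bit storage needs one eighth of the float32 footprint.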

QLoRA reduces this memory footprint using quantization, which represents parameters with fewer bits, such as 16, 8, or 4. Specifically, QLoRA stores the frozen pretrained weights in a 4-bit format (4-bit NormalFloat, or NF4), while the small LoRA matrices are trained in higher precision.

This results in a significant decrease in the amount of memory required to store the model's parameters.

For instance, suppose a model has a million parameters, each represented as a 32-bit floating-point number.

Representing them with 8-bit numbers instead reduces memory usage by 75% (from 4 bytes to 1 byte per parameter), at the cost of some numerical precision.
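Absmax int8 quantization is a simple way to see quantization at work:

1. Scale the weights so that the largest magnitude maps to 127.
2. Round to integers.
3. Multiply back by the scale when the values are needed.

This sketch covers only that basic scheme. QLoRA itself uses a 4-bit NormalFloat (NF4) data type with block-wise scaling, which this code does not implement. The weights are made-up numbers.

```python
def quantize_absmax_int8(weights):
    """Map floats to integers in [-127, 127] using the largest absolute value as the scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in q_weights]

weights = [0.42, -1.37, 0.05, 0.88, -0.61]  # made-up float32 weights
q, scale = quantize_absmax_int8(weights)
restored = dequantize(q, scale)

print(q)                                   # integers that fit in one byte each
print([round(w, 3) for w in restored])     # close to the originals
print(max(abs(a - b) for a, b in zip(weights, restored)))  # worst-case rounding error
```

The rounding error per weight is at most half the scale. Because the scale is set by the largest weight, a single outlier weight increases the error for all the others. Block-wise schemes such as QLoRA's limit this effect.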

2) Direct Preference Optimization

Direct Preference Optimization (DPO) simplifies fine-tuning on human preferences. It skips the reward model that Reinforcement Learning from Human Feedback (RLHF) must train, as in models like ChatGPT.

In RLHF, the model is initially trained on a dataset containing instructions and desired responses. Human raters then provide feedback on the model's outputs, which is used to create a reward model.

The Proximal Policy Optimization (PPO) algorithm is used to adjust the model's policy toward generating higher-quality outputs based on the reward model's scores.

In contrast, DPO replaces the reward modeling and PPO steps with a single maximum likelihood objective trained directly on the preference data. This makes the process significantly simpler to implement and cheaper to run, although it still relies on human preference data.

The following image (from the paper) explains the difference between PPO and DPO:

As shown above, DPO directly optimizes the policy to satisfy the preferences. It uses a simple binary classification (logistic) loss on pairs of preferred and rejected responses, fit by maximum likelihood.
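The DPO objective for a single preference pair takes a few lines to write. It needs four log-probabilities. Two come from the model being trained (the policy) and two from a frozen reference model, each evaluated on the preferred response y_w and the rejected response y_l. The loss is -log σ(β · [(log π(y_w) - log π_ref(y_w)) - (log π(y_l) - log π_ref(y_l))]). The log-probability values and β = 0.1 below are made up. In a real setup, each value would be the sum of token log-probabilities under the corresponding model.

```python
import math

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, computed from sequence log-probabilities."""
    chosen_margin = policy_chosen - ref_chosen        # how much more the policy favors y_w than the reference does
    rejected_margin = policy_rejected - ref_rejected  # the same quantity for y_l
    logits = beta * (chosen_margin - rejected_margin)
    return math.log1p(math.exp(-logits))              # equals -log(sigmoid(logits))

# Reference model's log-probabilities for the two responses (made-up values).
ref_w, ref_l = -12.0, -11.0

print(dpo_loss(-12.0, -11.0, ref_w, ref_l))  # policy == reference: loss = log 2
print(dpo_loss(-9.0, -14.0, ref_w, ref_l))   # policy now favors y_w: lower loss
```

This is the same -log σ(·) form as a pairwise reward-model loss. The key insight of DPO is that β times the policy-to-reference log-ratio acts as an implicit reward, so no separate reward model is needed.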

Summing up

Looking at the entire journey, it is quite clear that all AI breakthroughs we have seen lately (especially in the GenAI-era) aren’t quite as sudden and abrupt as they are portrayed in news and media.

Behind each milestone, there are years of research, collaboration, and incremental advancements that have collectively propelled the field forward.

Nonetheless, as we reflect on the past 12 years, the rate of progress is still pretty breathtaking and impressive.

From the early days of deep learning gaining traction to the development of massive language models such as GPT-4, we have watched AI evolve from a promising field into a transformative force.

We at AIport track all such events, so keep your eye on this newsletter to stay updated with AI news.


Thanks for reading!