What is Model Context Protocol (MCP)?

The next big thing for AI Agents.

Lately, there has been a lot of buzz around the Model Context Protocol (MCP). You have probably heard about it too.

In this issue, we’ll explain what MCP is, what it solves, and why it is a big thing in the context of LLMs.

As always, we’ll refrain from overwhelming you with technical jargon and will keep everything simple and digestible.

Let’s dive in!

Analogy 1

Imagine you only know English.

To get info from a person who only knows:

French, you must learn French.

German, you must learn German.

And so on.

Communicating this way, learning even five languages would be a nightmare!

But what if you have a translator who understands all languages?

You can talk to the translator.

It can infer the info you want.

It can pick the person to talk to.

It can get you a response.

The translator is like MCP!

It lets you (Agents) talk to other people (tools) through a single interface.

For Agents, integrating even a single tool or API means reading docs and writing code, much like learning a language.

To simplify this, platforms now offer MCP servers. Developers can plug them in, and Agents can use their tools/APIs instantly.

Analogy 2

Intuitively speaking, MCP is like a USB-C port for your AI applications.

Just as USB-C offers a standardized way to connect devices to various accessories, MCP standardizes how your AI apps connect to different data sources and tools.

A technical overview

Instead of hard-wiring tools inside every app or agent, MCP:

Standardizes how tools are defined, hosted, and exposed to LLMs.

Makes it easy for an LLM to discover available tools, understand their schemas, and use them.

Provides approval and audit workflows before tools are invoked.

Separates the concern of tool implementation from consumption.
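To make "tool schemas" a bit more concrete, here is a minimal sketch of what a tool definition can look like. MCP describes each tool with a name, a description, and a JSON Schema for its inputs; the get_weather tool and the describe_for_llm helper below are hypothetical and exist only for illustration.

```python
# A hypothetical weather tool, described the way an MCP server lists a tool:
# a name, a human-readable description, and a JSON Schema for its inputs.
weather_tool = {
    "name": "get_weather",
    "description": "Return the forecast for a location on a given date.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "location": {"type": "string"},
            "date": {"type": "string", "description": "ISO date, e.g. 2025-01-31"},
        },
        "required": ["location", "date"],
    },
}


def describe_for_llm(tool):
    """Render a tool definition as one line of text an LLM could be shown."""
    params = ", ".join(tool["inputSchema"]["properties"])
    return f"{tool['name']}({params}): {tool['description']}"


print(describe_for_llm(weather_tool))
# get_weather(location, date): Return the forecast for a location on a given date.
```

Because the definition is plain data, the app consuming the tool never needs to see how the tool is implemented.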

Here’s a diagram that explains the process:

The MCP server exposes some tools (check the top right segment above).

Step 1 → The user sends a query to the MCP client (which lives inside a host app such as Cursor or Claude). At the same time, the tools exposed by the MCP server are also sent to the MCP client.

Step 2 → The MCP client sends the query and the tools to the LLM.

Step 3 → Based on the input, the LLM decides the best tool to invoke, its parameters, and returns it to the MCP client.

Step 4 → The user receives a request to approve tool use.

Step 5 → Once approved, the MCP client asks the MCP server to invoke the tool with the parameters the LLM selected.

Step 6/7 → The MCP server invokes the tool, receives the tool output, and returns it to the MCP client.

Step 8 → The tool output and the query are sent to the LLM.

Step 9 → The LLM generates the final output.
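The steps above can be sketched as a toy simulation. Everything here is a hypothetical stand-in: ToyServer plays the MCP server, toy_llm plays the LLM with a hardcoded decision, and run plays the MCP client. It is not the real MCP SDK, just the order of events.

```python
# Toy simulation of the nine steps. All names are hypothetical stand-ins.

TOOLS = {
    "add": {
        "description": "Add two integers.",
        "handler": lambda a, b: a + b,
    }
}


class ToyServer:
    """Stand-in for an MCP server that exposes the tools above."""

    def list_tools(self):
        return {name: tool["description"] for name, tool in TOOLS.items()}

    def call_tool(self, name, arguments):
        return TOOLS[name]["handler"](**arguments)


def toy_llm(query, tools, tool_output=None):
    """Stand-in for the LLM: first picks a tool, later writes the answer."""
    if tool_output is None:
        # A real LLM would choose based on the query and the tool list.
        return {"tool": "add", "arguments": {"a": 2, "b": 3}}
    return f"The answer is {tool_output}."


def run(query, server, approve):
    tools = server.list_tools()                      # Step 1: query and tools reach the client
    request = toy_llm(query, tools)                  # Steps 2-3: LLM picks a tool and parameters
    if not approve(request):                         # Step 4: user approves (or declines)
        return "Tool use was declined."
    output = server.call_tool(request["tool"], request["arguments"])  # Steps 5-7
    return toy_llm(query, tools, tool_output=output)  # Steps 8-9: LLM produces the output


print(run("What is 2 + 3?", ToyServer(), approve=lambda request: True))
# The answer is 5.
```

Passing approve=lambda request: False instead shows the approval gate from Step 4: the tool is never invoked.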

Why does it matter?

Here’s one of the reasons this setup can be so powerful:

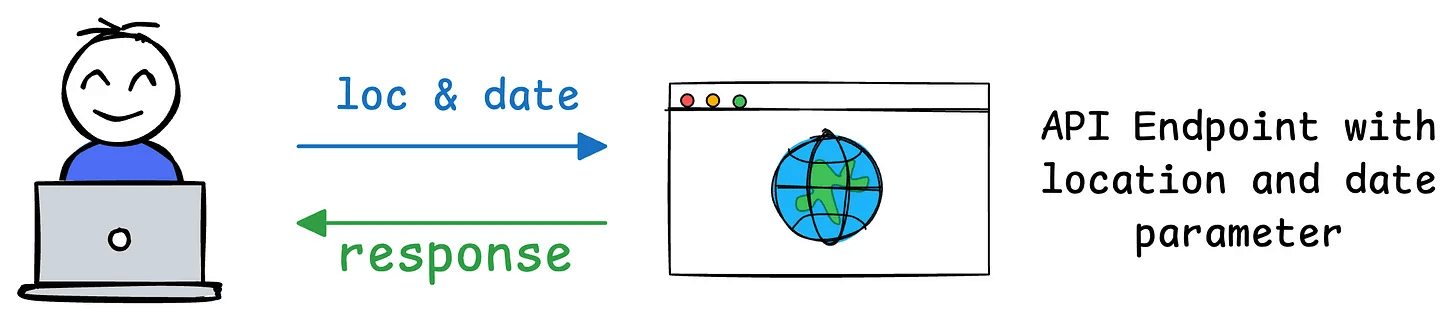

Imagine you have developed a weather API.

Under a traditional API setup:

If your API initially requires two parameters (e.g., location and date), users will integrate it with their applications to send requests with those exact parameters.

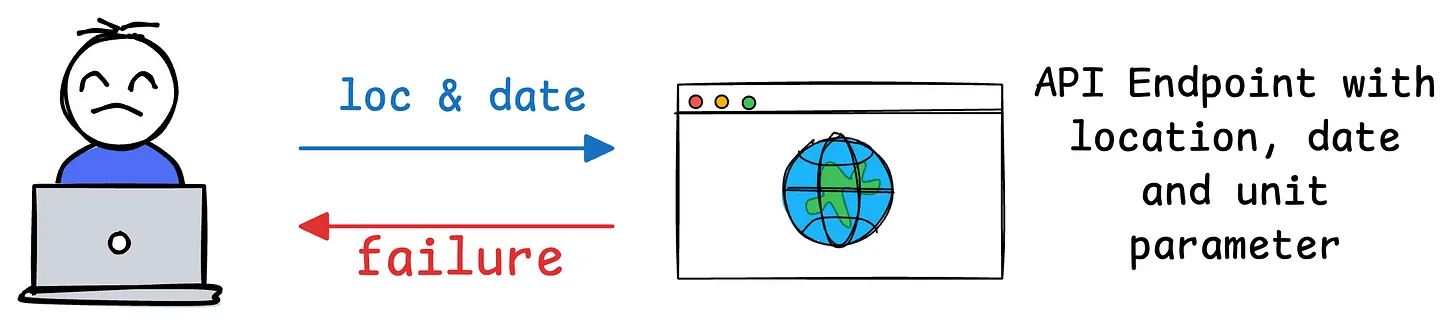

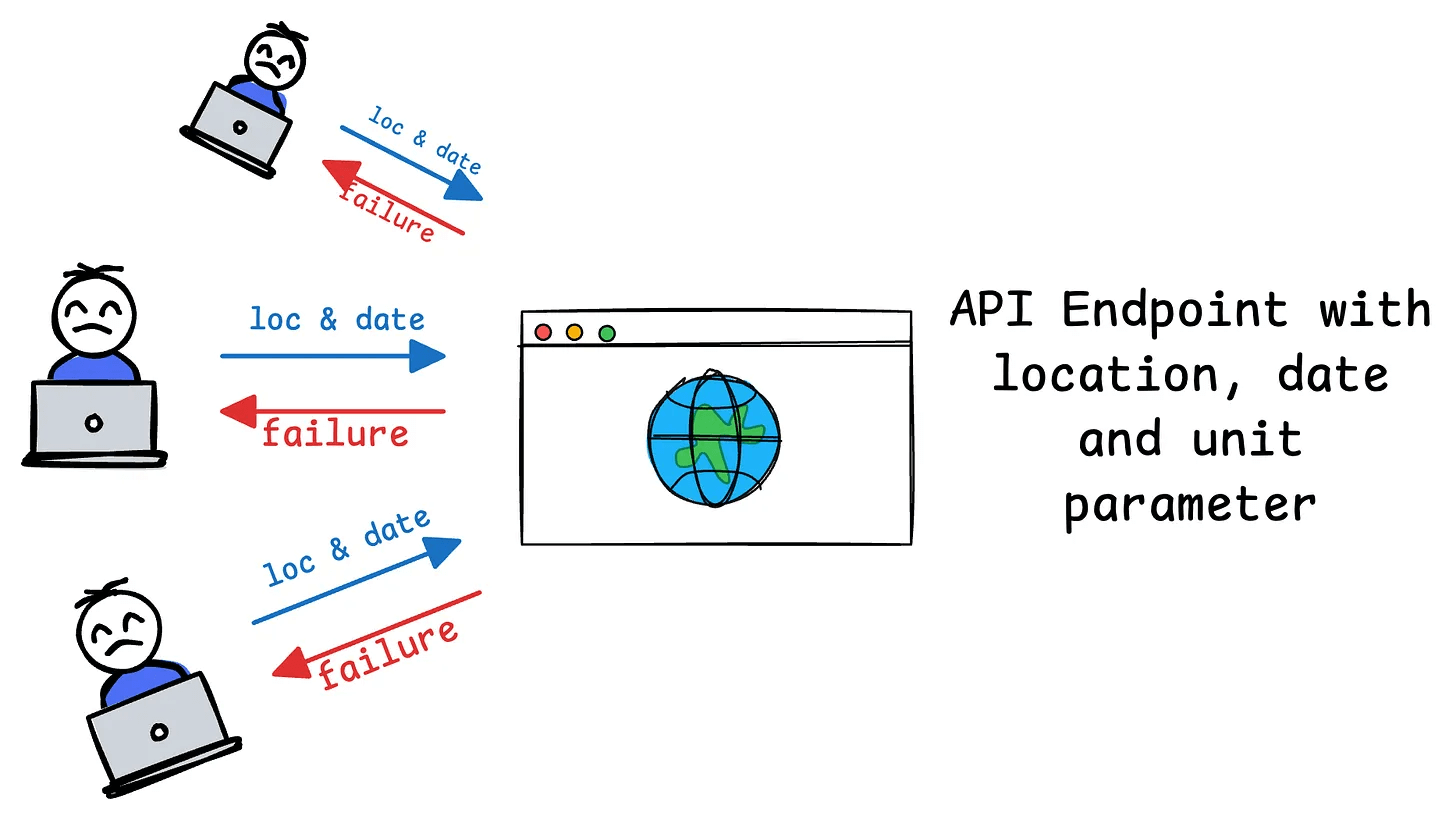

Later, if you decide to add a third required parameter (e.g., unit for temperature units like Celsius or Fahrenheit), the API’s contract changes.

This means all users of your API must update their code to include the new parameter. If they don’t update, their requests might fail, return errors, or provide incomplete results.

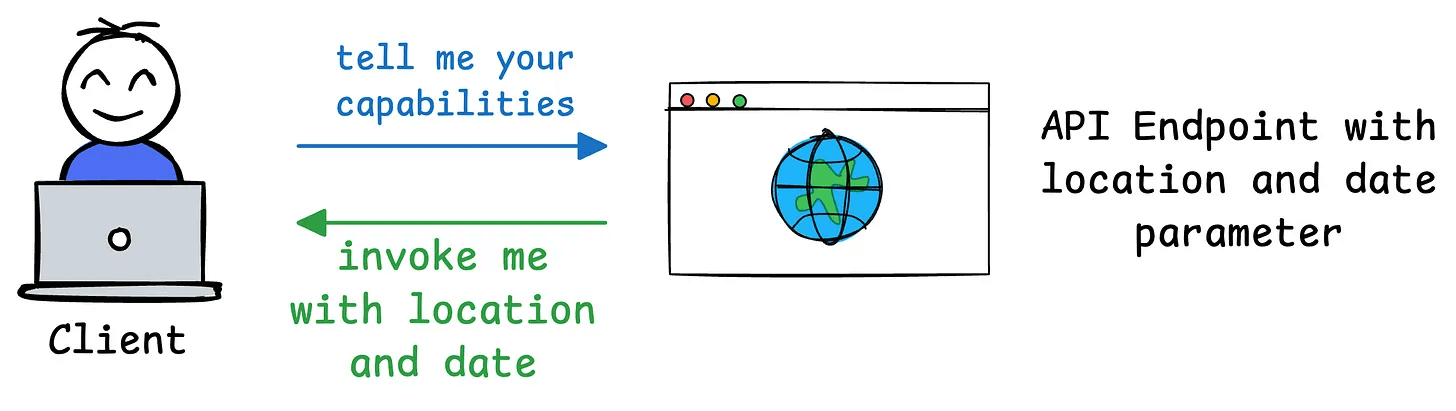
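Here is a small sketch of that breakage, using plain Python functions in place of a real HTTP API. The function names and the placeholder temperature value are made up for illustration.

```python
def weather_api_v1(location, date):
    """Original contract: two required parameters."""
    return {"location": location, "date": date, "temperature": 21}  # placeholder value


def weather_api_v2(location, date, unit):
    """Updated contract: unit is now required as well."""
    return {"location": location, "date": date, "unit": unit, "temperature": 21}


def client_request(api):
    """Client code written against the original contract (hardcoded parameters)."""
    return api(location="Paris", date="2025-01-31")


def try_request(api):
    """Call the API the way the old client does and report what happened."""
    try:
        return "ok", client_request(api)
    except TypeError as err:
        return "failed", str(err)


print(try_request(weather_api_v1)[0])  # ok
print(try_request(weather_api_v2)[0])  # failed
```

The client did nothing wrong; it simply never learned about the new parameter, so it keeps working only after someone edits and redeploys it.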

MCP’s design solves this as follows:

MCP introduces a dynamic and flexible approach that contrasts sharply with traditional APIs.

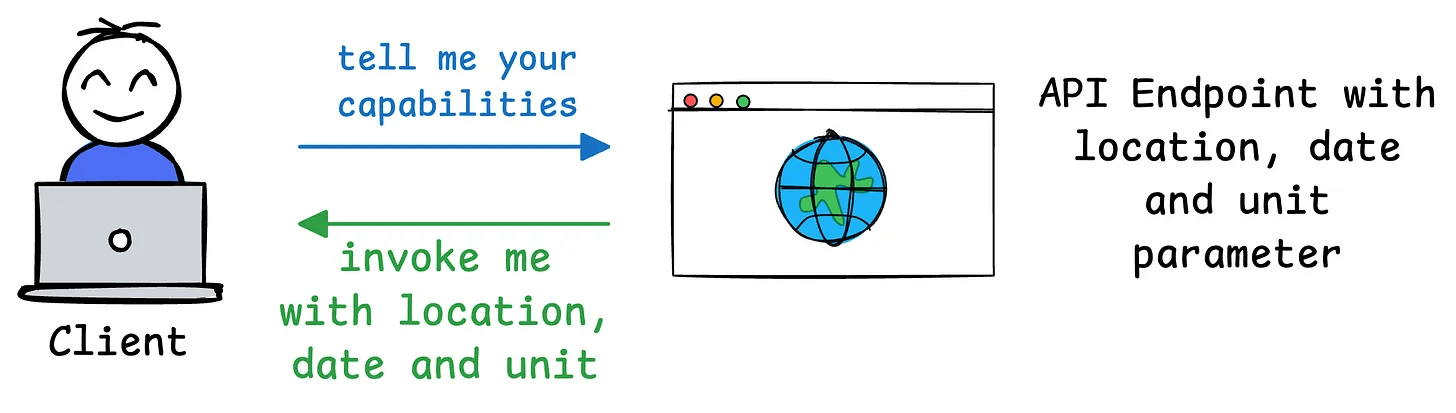

For instance, when a client (e.g., an AI application like Claude Desktop) connects to an MCP server (e.g., your weather service), it sends an initial request to learn the server’s capabilities.

The server responds with details about its available tools, resources, prompts, and parameters. For example, if your weather API initially supports location and date, the server communicates these as part of its capabilities.

If you later add a unit parameter, the MCP server can dynamically update its capability description during the next exchange. The client doesn’t need to hardcode or predefine the parameters; it simply queries the server’s current capabilities and adapts accordingly.

This way, the client can adjust its behavior on the fly, using the updated capabilities (e.g., including unit in its requests) without needing to rewrite or redeploy code.
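A minimal sketch of this idea follows. The advertised_schema and build_arguments helpers are hypothetical, and the context dictionary stands in for values that, in a real setup, the LLM would extract from the user's query. The point is only that the client code stays the same while the schema changes.

```python
def advertised_schema(with_unit):
    """Hypothetical stand-in for the input schema a weather server advertises."""
    properties = {"location": {"type": "string"}, "date": {"type": "string"}}
    if with_unit:
        properties["unit"] = {"type": "string", "enum": ["celsius", "fahrenheit"]}
    return {"type": "object", "properties": properties, "required": list(properties)}


def build_arguments(schema, context):
    """Build a tool call from whatever parameters the server currently advertises."""
    missing = [name for name in schema["required"] if name not in context]
    if missing:
        raise ValueError(f"cannot fill required parameters: {missing}")
    return {name: context[name] for name in schema["properties"] if name in context}


# Values the LLM might have pulled from the user's query (assumed for this sketch).
context = {"location": "Paris", "date": "2025-01-31", "unit": "celsius"}

print(build_arguments(advertised_schema(with_unit=False), context))
# {'location': 'Paris', 'date': '2025-01-31'}
print(build_arguments(advertised_schema(with_unit=True), context))
# {'location': 'Paris', 'date': '2025-01-31', 'unit': 'celsius'}
```

The same build_arguments function handles both versions of the server because it reads the parameters from the schema instead of hardcoding them.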

We hope this clarifies what MCP does.

In the future, we shall explore creating custom MCP servers and building hands-on demos around them. Stay tuned!

👉 Over to you: Do you think MCP is more powerful than a traditional API setup?

Thanks for reading!